AI and national security: lethal robots or better logistics?

Posted By Michael Shoebridge on July 20, 2018 @ 06:00

ASPI’s artificial intelligence and national security masterclass held on 2 July gave some practical insights from experts and senior Australian officials about where AI might be used in national security.

The areas might surprise you, particularly if you were predicting swarms of killer robots fighting a war in the early 2020s. What might also surprise you is the number of uses, and the speed at which they’re likely to occur.

Artificial intelligence is both less and more than the public debate suggests. Put simply, it refers to computer systems performing tasks that normally require human intelligence [1], such as decision-making, translation, pattern recognition and speech recognition. Early applications of AI are likely to involve things like optimising supply chains, helping digest data in jobs like visa processing, defending against AI-enabled cyber attackers, and helping strategic leaders and operational commanders make sense of confused but data-rich operational environments.

Closer to those killer robots are applications that might provide the edge to advanced missiles in the last stages of an attack, as well as countermeasures to systems like hypersonic weapons.

So, why are these particular areas likely first adopters of AI? One simple answer is because Australian agencies are already using AI to some extent—with a human decision-maker in the loop at one or more stages. A bigger reason is that the ‘business processes’ in national security agencies are similar to processes in companies that are already big users of AI.

Two other drivers for adopting AI are the availability of large datasets for analysis and a need to make quick decisions. If one of those drivers applies to an activity, it can make AI a sensible solution. If both apply, it’s a likely AI sweet spot, where the gains or capability advantages could be large. The big tech companies already use AI across their businesses; Google is an obvious example [2]. Big resource companies are also early adopters—with driverless mining trucks and trains.

Logistics and supply-chain optimisation may sound mundane, but they’re valuable areas in which to apply AI. That’s because modern systems are prolific generators of data that can be used to improve efficiency and reliability. Simple examples are condition monitoring of systems on board navy ships, and predictive ordering of spares, weapons, fuel and food. AI tools can be trained on the data that the Department of Defence and its major contractors produce, with human decision-makers validating AI judgements and improving the algorithms.

Cybersecurity is another no-brainer for AI, because cyber is the land of large data and there’s already a problem with relying on people to process it. The move to connect devices and machinery other than computers and smartphones to the internet, as is happening with new 5G networks, makes it essential for cybersecurity systems to scale up to handle massive datasets, and to do so at machine speed. AI will empower human cyber-defenders (and cyber-attackers) to manage the enormous expansion of the attack surface brought about by 5G and the internet of things.

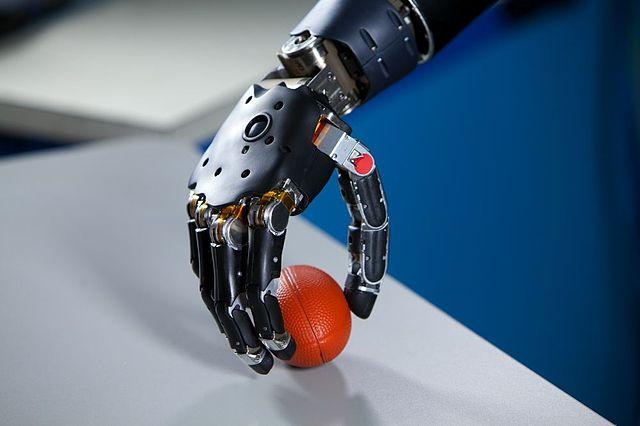

But let’s get closer to the battlefront. Why won’t AI give us killer robots now? The good news is that, outside the hothouse of wartime innovation, even adversaries with few reservations about how they gain an operational advantage share the practical concerns of those who, like our military and broader national security community, pay close attention to their obligations under domestic and international humanitarian law.

The key concerns are about trust. Fully autonomous systems using machine learning can be fast but dumb, or, more politely, smart but flawed. During the Cold War, there were times when the computer said launch but was overridden by humans who suspected something wasn’t right, and we’re here because of those human judgements. Recent examples of self-driving cars crashing and killing people are further demonstrations of the fragility and flaws of current fully autonomous systems.

National-security and military decision-makers are unlikely to send off the first AI-driven autonomous lethal robot, mainly because they fear loss of control and the potential blowback from doing something risky, with that ‘thing’ possibly killing their own forces or civilians who are in the way.

So, human-in-the-loop or human-on-the-loop approaches are likely to be core to AI in national security: both mean that critical decisions are made by people, not machines. Secretary of Home Affairs Mike Pezzullo calls this the ‘golden rule’ [3] for AI in national security.

History tells us that deciding and acting faster on better information than your adversary are key to success. AI can help commanders make sense of confused and rich data feeds from networked sensors on ships, in aircraft, on land vehicles, and now on individual soldiers and unmanned systems.

AI can also help advanced missiles hit their targets. A number of anti-ship, anti-aircraft and air-to-ground missiles already have active seekers and pre-programmed countermeasures that work against defensive systems. AI-enabled missiles, though, will be able to make decisions based on data gathered in flight about the actual countermeasures and defences on the target ship, aircraft, ground formation or base. The rapid data processing of AI could increase both the hit rate and the damage done. Use against specific military targets avoids the autonomous killer robot problem.

Just as in cybersecurity, AI on the battlefield will be part of the classic tango of measure and countermeasure, with the balance constantly shifting between aggressor and defender.

This sketch about AI in national security shows one clear thing: it’s real and it’s already happening faster than most realise. Many applications won’t elicit hyped-up commentary about the next war, but they are nonetheless powerful and likely to be widespread.

That brings us to a point made by Australia’s chief scientist, Alan Finkel [4], and some of the people at the ASPI event: given the speed of development of AI, urgent work is required to establish the ethical and legal frameworks that AI developers must use for applications involving decisions about humans. That work is for policymakers, legal advisers and ethicists, in consultation with the technologists.

I’d argue that our chief scientist should get on with leading AI development, with ethicists doing the ethics. Both types of work must be done now if they’re to guide our future uses of machine-enabled or even machine-made decisions. Let’s not leave it to others to develop AI. And let’s not leave it to AI to decide how we use AI—that is a job for us humans.

Article printed from The Strategist: https://aspistrategist.ru

URL to article: /ai-and-national-security-lethal-robots-or-better-logistics/

URLs in this post:

[1] performing tasks that normally require human intelligence: https://www.forbes.com/sites/bernardmarr/2018/02/14/the-key-definitions-of-artificial-intelligence-ai-that-explain-its-importance/#78f99aa94f5d

[2] obvious example: https://www.forbes.com/sites/bernardmarr/2018/04/09/the-amazing-ways-google-uses-artificial-intelligence-and-satellite-data-to-prevent-illegal-fishing/#5c77afc81c14

[3] calls this the ‘golden rule’: https://www.smh.com.au/politics/federal/top-official-s-golden-rule-in-border-protection-computer-won-t-ever-say-no-20180712-p4zr3i.html

[4] Alan Finkel: http://www.abc.net.au/radio/programs/am/chief-scientist-urges-regulation-of-driverless-cars/9942696
