Artificial intelligence isn’t that intelligent

Posted By Harriet Farlow on August 4, 2022 @ 06:00

Late last month, Australia’s leading scientists, researchers and businesspeople came together for the inaugural Australian Defence Science, Technology and Research Summit [1] (ADSTAR), hosted by the Defence Department’s Science and Technology Group. In a demonstration of Australia’s commitment to partnerships that would make our non-allied adversaries flinch, Chief Defence Scientist Tanya Monro was joined by representatives from each of the Five Eyes partners, as well as Japan, Singapore and South Korea. Two streams focusing on artificial intelligence were dedicated to research and applications in the defence context.

‘At the end of the day, isn’t hacking an AI a bit like social engineering?’

A friend who works in cybersecurity asked me this. In the world of information security, social engineering is the game of manipulating people into divulging information that can be used in a cyberattack or scam. Cyber experts may therefore be excused for assuming that AI might display some human-like level of intelligence that makes it difficult to hack.

Unfortunately, it’s not. It’s actually very easy.

The man who coined the term ‘artificial intelligence’ in the 1950s, computer scientist John McCarthy, also said [2] that once we know how it works, it isn’t called AI anymore. This explains why AI means different things to different people. It also explains why trust in and assurance of AI is so challenging.

AI is not some all-powerful capability that thinks like humans, however well it can mimic them. Most implementations, specifically machine-learning models, are just very complicated applications of the statistical methods we’re familiar with from high school. That doesn’t make them smart, merely complex and opaque. This leads to problems in AI safety and security.

Bias in AI has long been known to cause problems. For example, AI-driven recruitment systems in tech companies have been shown to filter out applications from women [3], and re-offence prediction systems in US prisons exhibit consistent biases against black inmates [4]. Fortunately, bias and fairness concerns in AI are now well known and actively investigated by researchers, practitioners and policymakers.

AI security is different, however. While AI safety deals with the impact of the decisions an AI might make, AI security looks at the inherent characteristics of a model and whether they could be exploited. AI systems are vulnerable to attackers and adversaries just as cyber systems are.

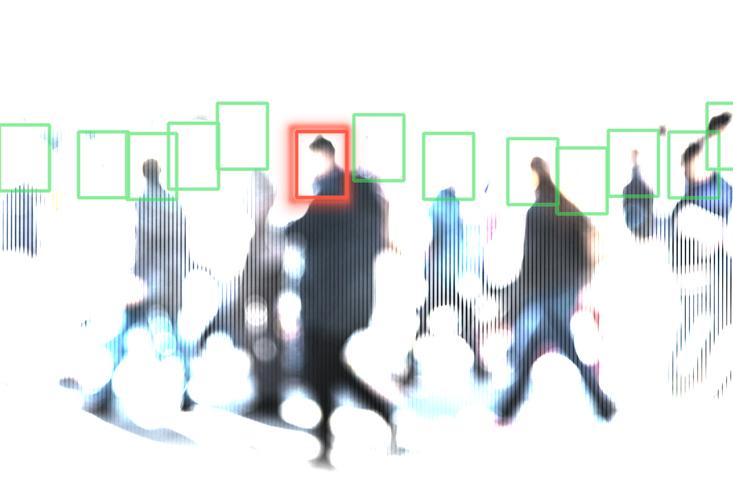

A known challenge is adversarial machine learning, where ‘adversarial perturbations’ added to an image cause a model to predictably misclassify it.

When researchers added adversarial noise imperceptible to humans to an image of a panda, the model predicted it was a gibbon [5].
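To make the mechanics concrete, here is a minimal sketch of the gradient-sign idea behind the panda example. It is an illustration under stated assumptions, not the researchers’ setup: the ‘model’ is a hypothetical 100-feature logistic classifier with hand-picked weights rather than an image network, and the names `predict_proba` and `fgsm` are invented for this example. Each input feature is nudged by a small amount `eps` in whichever direction increases the model’s loss.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w, b, x):
    """Probability of class 1 under a toy logistic model (hypothetical)."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def fgsm(w, b, x, y, eps):
    """Shift every feature by +/- eps in the direction that raises the loss.

    For logistic regression with cross-entropy loss, the gradient of the
    loss with respect to the input is (p - y) * w.
    """
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]


# Assumed toy data: 100 features with alternating positive/negative weights.
n = 100
w = [0.5 if i % 2 == 0 else -0.5 for i in range(n)]
b = 0.0
x = [0.52 if i % 2 == 0 else 0.48 for i in range(n)]
y = 1      # the correct label for x
eps = 0.05  # maximum change allowed per feature

x_adv = fgsm(w, b, x, y, eps)
p_clean = predict_proba(w, b, x)
p_adv = predict_proba(w, b, x_adv)
max_change = max(abs(a - c) for a, c in zip(x_adv, x))

print(f"clean input:       p(class 1) = {p_clean:.2f}")
print(f"adversarial input: p(class 1) = {p_adv:.2f}")
print(f"largest per-feature change   = {max_change:.2f}")
```

In this toy case the model’s confidence in the correct class falls from about 0.73 to about 0.18, even though no feature moves by more than 0.05. Many tiny changes, all aligned against the model, add up to a large shift in its output. That is the same effect that, at the scale of an image with many thousands of pixels, leaves the altered picture looking unchanged to a human.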

In another study, a 3D-printed turtle had adversarial perturbations embedded in its surface so that an object-detection model believed it to be a rifle [6]. This was true even when the object was rotated.

I can’t help but notice disturbing similarities between the rapid adoption of and misplaced trust in the internet in the latter half of the last century and the unfettered adoption of AI now.

It was a sobering moment when, in 2018, the then US director of national intelligence, Daniel Coats, called out cyber as the greatest strategic threat [7] to the US.

Many nations, including Australia, the US and the UK, are publishing AI strategies that address these concerns, and there’s still time to apply the lessons learned from cyber to AI. These include investing in AI safety and security at the same pace as in AI adoption; developing commercial solutions for AI security, assurance and audit; legislating AI safety and security requirements, as is done for cyber [8]; and building a greater understanding of AI and its limitations, as well as of the technologies, like machine learning, that underpin it.

Cybersecurity incidents have also driven home the necessity for the public and private sectors to work together not just to define standards, but also to meet them. This is essential both domestically and internationally.

Autonomous drone swarms, undetectable insect-sized robots and targeted surveillance based on facial recognition are all technologies that exist. While Australia and our allies adhere to ethical standards for AI use, our adversaries may not.

Speaking on resilience at ADSTAR, Chief Scientist Cathy Foley discussed how pre-empting and planning for setbacks is far more strategic than simply ensuring you can get back up after one. That couldn’t be more true when it comes to AI, especially given Defence’s unique risk profile and the current geostrategic environment.

I read recently that Ukraine is using AI-enabled drones [9] to target and strike Russians. Notwithstanding the ethical issues this poses, the article I read was written in Polish and translated to English for me by Google’s language translation AI. Artificial intelligence is already pervasive in our lives. Now we need to be able to trust it.

Article printed from The Strategist: https://aspistrategist.ru

URL to article: /artificial-intelligence-isnt-that-intelligent/

URLs in this post:

[1] Australian Defence Science, Technology and Research Summit: https://www.adstarsummit.com.au/event/3ea1388a-66ec-4605-b5a7-1c53931b9341/summary

[2] said: https://cacm.acm.org/blogs/blog-cacm/138907-john-mccarthy/fulltext

[3] filter out applications from women: https://www.reuters.com/article/us-amazon-com-jobs-automation-insight-idUSKCN1MK08G

[4] biases against black inmates: https://www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing

[5] model predicted it was a gibbon: https://arxiv.org/pdf/1412.6572.pdf

[6] believed it to be a rifle: https://arxiv.org/pdf/1707.07397.pdf

[7] cyber as the greatest strategic threat: https://www.defense.gov/News/News-Stories/Article/Article/1440838/cyber-tops-list-of-threats-to-us-director-of-national-intelligence-says/

[8] as is done for cyber: https://www.landers.com.au/legal-insights-news/cybersecurity-in-australia-passed-and-pending-legislation

[9] using AI-enabled drones: https://www.onet.pl/informacje/onetwiadomosci/rozwiazali-problem-armii-ukrainy-ich-pomysl-okazal-sie-dla-rosjan-zabojczy/pkzrk0z,79cfc278
