ASPI has identified multiple attempts by the Chinese Communist Party (CCP) to manipulate Taiwanese voters by spreading disinformation and propaganda on social media. These influence operations primarily sought to undermine presidential and legislative candidates from the Democratic Progressive Party (DPP). We assess that they likely had minimal impact on the integrity of the election results, owing to the resilience of Taiwan’s civil society. Partial credit must also go to the Taiwanese government for its efforts to raise public awareness of electoral interference and to crack down on other illicit activities, such as the spreading of fake polls.

We observed at least two China-originated threat actors seeking to interfere in this election. We believe one is likely linked to the CCP’s largest network of inauthentic social media accounts, known as Spamouflage or Dragonbridge. This network has evolved its online influence capabilities by using AI-generated avatars, and it amplified the contents of a document titled ‘The Secret History of Tsai Ing-wen’, targeting Taiwan’s outgoing president. We assess that the second China-based actor is likely linked to the first coordinated inauthentic behaviour (CIB) network identified in Meta’s 2023 third quarter Adversarial Threat Report. This second actor conducted more sophisticated cyber-influence operations, including disseminating what it claimed were leaked Taiwanese government documents and a fake DNA paternity test.

The key takeaway for other democracies holding elections in 2024 is that they must not become complacent: the CCP is likely to replicate and refine its tactics against more divided and less resilient electorates, where they may prove more effective. We found that some of the inauthentic social media accounts trying to sway Taiwanese voters were also spreading anti-Bharatiya Janata Party (BJP) and anti-Indian government content, presumably to influence public sentiment ahead of India’s elections this year. These accounts primarily target audiences in the northeastern Indian state of Manipur, where India has suspected Beijing of aiding insurgent groups to destabilise the region.

The Spamouflage-affiliated accounts on X/Twitter (see image below), Facebook, Medium and Taiwanese blog sites called for a change of government and accused DPP presidential candidate Lai Ching-te of corruption and of embezzling military procurement funds. They labelled him and his running mate, former representative to the US Hsiao Bi-khim, ‘America’s pets’. The most common narrative spread by these accounts accused Lai and Hsiao of secretly signing a NT$2 trillion (US$65 billion) arms purchase from the US to help the DPP win the election. There are no authoritative reports of such a deal, and the claim was almost certainly disinformation.

The posting patterns and timelines of these accounts suggested they were very likely linked to the Spamouflage network. According to internal group communications revealed by the US Department of Justice, Spamouflage has links to Chinese law enforcement and coordinates with other agencies such as the Ministry of Foreign Affairs and the United Front Work Department. Accounts targeting Lai and Hsiao had taken part in previous Spamouflage campaigns against Chinese virologist Yan Limeng, Chinese businessman Guo Wengui and Chinese dissidents. They also spread disinformation about Japan’s release of treated wastewater. These accounts tended to post between 9am and 9pm, during Beijing business hours. While Spamouflage accounts were active throughout 2023, ASPI observed a significant increase in the network’s posts attacking the DPP after the joint presidential ticket of the Kuomintang (KMT) and the Taiwan People’s Party (TPP) collapsed on 16 November 2023.

Spamouflage-affiliated accounts also sought to harass DPP legislative candidates, calling the DPP’s Lin Ching-yi a ‘shameless’ politician. Accounts would reply en masse to her posts to troll her or to claim she was ‘taking advantage of men to get ahead’ (#林静仪借男人上位). These tactics were reminiscent of previous harassment and psychological targeting of women of Asian heritage, which was intended to silence them publicly and damage their mental health. Lin also faced negative coverage throughout the year in the Straits Herald, a news outlet sponsored by the Fujian Daily, the official newspaper of the Fujian Provincial Committee of the Chinese Communist Party, which falsely alleged that she had plagiarised her master’s thesis. Lin lost her seat to KMT challenger Yan Kuan-heng.

Other politicians targeted by the network included Lin Feifan, the former deputy secretary-general of the DPP, and Taiwan’s Foreign Minister, Joseph Wu, whom Spamouflage accounts accused of sexually harassing female colleagues and having affairs. Posts targeting Wu often used the #METOO hashtag and included images of him doctored to add women (see images below).

Starting on 2 January 2024, Spamouflage-linked accounts began flooding social media platforms, including X/Twitter, Facebook, Reddit, WeChat and TikTok, with posts about a 318-page document called ‘The Secret History of Tsai Ing-wen’ (蔡英文秘史), which falsely accused Tsai of corruption and promiscuous behaviour. The document was originally uploaded to Zenodo, an open-source data repository that Spamouflage-linked operators had previously used to upload a document claiming Covid-19 originated in the US. Most of the document’s metadata had been removed, but enough remained to indicate that it was created with ‘WPS 文字’, a word processor developed by Chinese software company Kingsoft that is commonly used in mainland China but rarely elsewhere. To draw further online attention, operators spammed a link to the document to prominent Chinese-language online commentators.

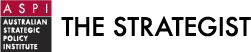

On YouTube, Spamouflage-linked channels posted at least 490 videos referencing the ‘secret history’ document between 4 and 10 January, before YouTube suspended all the channels. As noted by the Taipei Times, these videos used AI-generated content, and some had been edited using CapCut, video-editing software developed by ByteDance. ASPI also found that products from other companies were possibly used to create these videos. At least one video appeared to use a ‘speaking portrait’, a photorealistic AI-generated avatar created by D-ID, a US-based software company. ASPI is not suggesting that D-ID knowingly cooperated with Spamouflage-linked operators. However, the presenter used in the video (see images below) is available only to users with a paid subscription to D-ID’s platform.

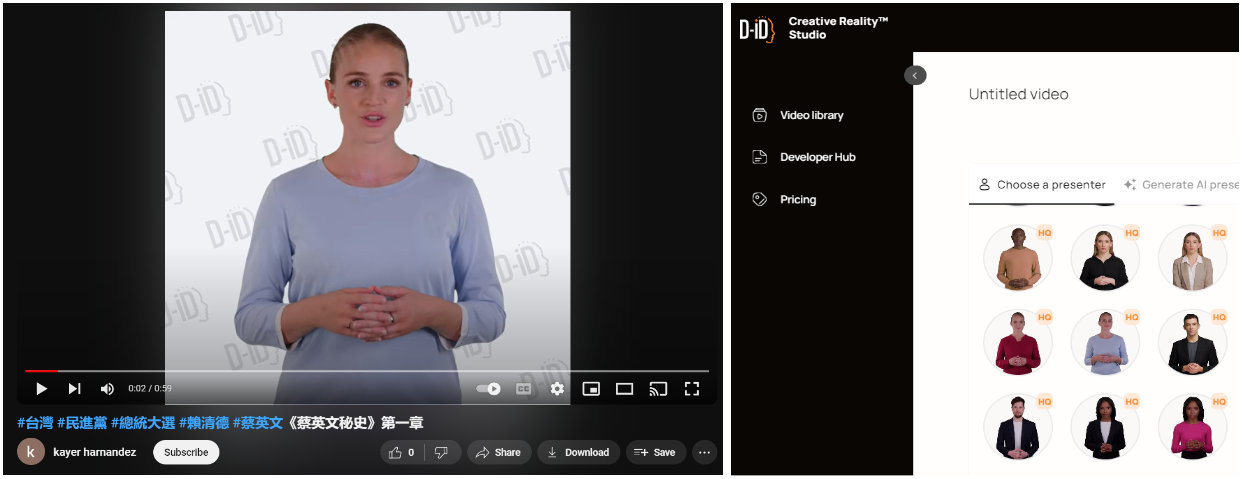

Another video posted by Spamouflage accounts appeared to have been generated with Weta365 (see images below), an app launched by Mobvoi, an AI company founded by ex-Google scientist Li Zhifei with funding from Sequoia Capital China, Google and Volkswagen. One of the case studies on Weta365’s website shows the application being used by Chinese police officers to make training videos, which suggests there may be deeper cooperation between Mobvoi and Chinese security or intelligence services.

The CCP is now comfortably using AI-generated content as a cornerstone of its influence operations and propaganda arsenal. In late 2023, ASPI observed a similar but distinct China-originated coordinated inauthentic influence campaign on YouTube that used AI-generated entities and voiceovers to promote narratives such as the claim that China is winning the US-China technology war. This network of YouTube channels attracted just under 120 million views and 730,000 subscribers. With Spamouflage adopting similar tactics, the possibility that the network could amass significant online audiences with AI-generated content is a concerning development, especially outside closely monitored election periods.

In addition to Spamouflage activity, ASPI observed another likely CCP-linked online threat actor seeking to interfere in Taiwan’s election. This actor was more targeted and operated more sophisticated personas. On 17 December 2023, an anonymous user uploaded an alleged leak of Taiwanese government documents to the hacking and cybercrime forum BreachForums. One of the documents claimed that Taiwanese military intelligence funded a podcast called ‘Complicated Things in a Nutshell’ (繁事簡單說) as part of Taiwan’s media warfare against the mainland. The BreachForums post was then shared at least 82 times on X/Twitter. HK01, a Hong Kong-based online news site launched by pro-Beijing businessman Yu Pun-hoi, reportedly received similar documents by email and reported on them on 4 January 2024.

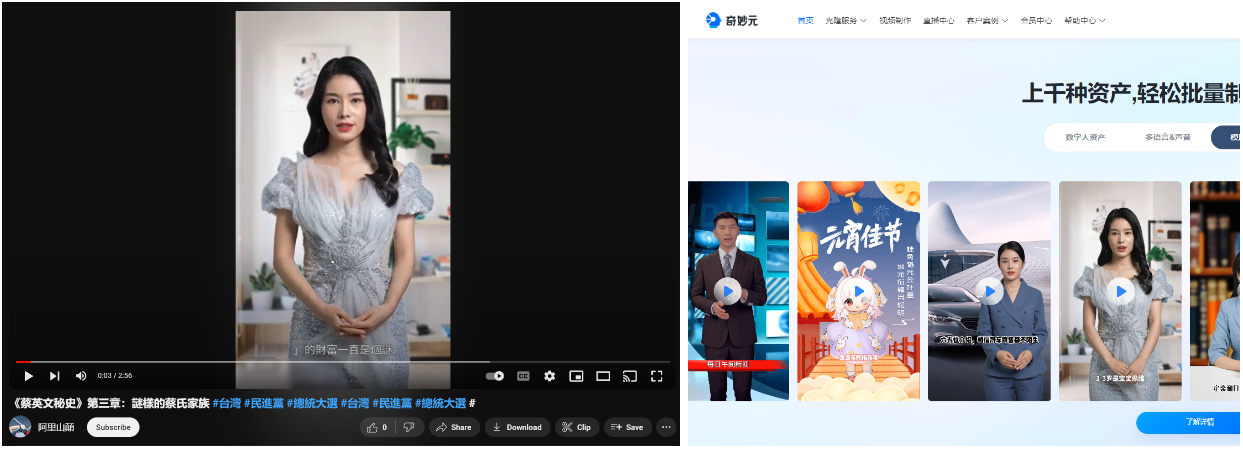

On 7 January 2024, another anonymous BreachForums user named ‘jt53ur39’ uploaded a fake DNA test purporting to show that Lai Ching-te had an illegitimate child. We agree with the assessment of Radio Free Asia’s Fact Checking Laboratory that the DNA report was likely fake. The numbers representing the allele sizes were not properly aligned down the columns, and the interpretation box lacked the conclusion expected in typical reports. The forum post by jt53ur39 was first shared on 4chan from an IP address in Thailand. ‘Lai Ching-te’s illegitimate son’ (賴清德私生子) was then mentioned nearly 20,000 times on X/Twitter, Facebook, YouTube and other online forums by inauthentic accounts that had also previously shared links to the alleged Taiwanese government document leaks. The number of such posts peaked on 12 January, the eve of the election, with over 13,000 mentions that day alone. This coincided with reporting on the allegation by the China Times, a Taiwan-based newspaper that reportedly takes instructions directly from the CCP’s Taiwan Affairs Office.

We assess that the network of inauthentic social media accounts sharing links to the Taiwanese government document leaks and the fake DNA test is likely linked to a China-based CIB network targeting India, Tibet and the US. Almost all of the X/Twitter accounts had retweeted @NickJonas154141, which Meta’s 2023 Third Quarter Adversarial Threat Report identified as having been acquired by a China-based CIB network. Like that CIB network, all the accounts in the new network posed as Indian citizens and posted anti-BJP or anti-Indian government content that primarily targeted users in Manipur.

One such account, Ayush Satyal, claims to be located in Nepal and has over 100 followers, but it appears to have stolen the profile image of Kapil Gnawali, a Nepal-based engineer not linked to the China-based network. Ayush Satyal frequently uses the #Manipur hashtag, retweets @NickJonas154141, shares the BreachForums post about Lai’s alleged DNA test (the only Chinese-language post on his profile) and calls on Indians never to vote for the BJP because of its activities in Manipur (see images below).

It’s difficult to assess the effectiveness of the CCP’s influence operations in these elections. More research is needed on Taiwanese sentiment in the lead-up to the election and on how specific CCP-fuelled narratives shaped opinions. Taiwan elected DPP candidates Lai and Hsiao, which suggests the CCP was ineffective in swinging the presidential vote. But the DPP lost its majority in Taiwan’s parliament, the Legislative Yuan. That could be partly due to the CCP’s amplification of anti-DPP content, but it more likely reflects Taiwanese voters’ dissatisfaction with the DPP’s domestic policies and the rising cost of living.

The prevalence of two known China-based networks, Spamouflage and the India-focused CIB network, on X/Twitter shows that the platform is failing to ensure online safety during a year of important elections. Of the seven X/Twitter accounts listed in Meta’s 2023 Q3 Adversarial Threat Report as having been acquired by a China-based CIB network, X/Twitter has suspended only one. Other platforms, such as YouTube and Meta, are being proactive and taking down state-backed influence operations when they are detected. However, the research community still lacks access to archives of these takedowns. That access would enable independent investigators to study new threat actors and would pressure platforms to be more transparent in their content moderation decisions.

The burden of preventing electoral interference must also extend to emerging Western generative AI companies. A laissez-faire approach to online safety could damage democracy and erode public trust. OpenAI’s announcement of a series of new initiatives to protect the integrity of elections is a welcome start, but OpenAI should also publicly release threat reports on malicious state and non-state actors misusing its products, as social media platforms do. Companies such as Synthesia and D-ID, whose products are being co-opted by authoritarian intelligence and military services, must be more rigorous in their due diligence on clients; otherwise, governments should consider making generative AI companies liable for defamation or for facilitating electoral interference.

Likewise, Western governments and companies need to reassess their foreign direct investment in China’s AI industry if the products they are funding, such as Mobvoi’s Weta365 generative AI app, are likely to be used in political warfare operations against democratic states. There are no low-risk investments in China’s dual-use AI sector. Any Chinese AI company that is likely to deliver a high return on foreign investment will also have the capabilities the CCP wants for modernising its military or intelligence services, and will be required to cooperate under Beijing’s National Intelligence Law.

Democracies holding elections later this year, such as India, Indonesia, the UK and the US, should strengthen their relationships with Taiwan and the incoming Lai administration. India and Taiwan should share intelligence on CCP threat actors active in the recent Taiwanese election and jointly investigate the China-based inauthentic social media accounts targeting India’s Manipur region. Taiwan should share an intelligence memo about a December 2023 meeting of senior Chinese officials from the Propaganda Department, the Ministry of State Security, the Ministry of Defence and the Taiwan Affairs Office, held to consolidate their influence operations targeting the Taiwan election.

The success of Taiwan’s whole-of-society counter-disinformation and counter-propaganda efforts is primarily due to civil society organisations, including DoubleThink Lab, the Information Environment Research Center, the Taiwan FactCheck Center, Cofacts, Kuma Academy and many others. These organisations often investigate malicious online activity before government agencies can respond, and they counter false narratives in real time. They are themselves targets of the CCP’s propaganda apparatus.

Their collaborative approach demonstrates that defending against disinformation and electoral interference is not something organisations or states should face alone.