ASPI’s decades: Cyberattacks, deep fakes and the quantum revolution

Posted By Graeme Dobell on September 27, 2021 @ 06:00

ASPI celebrates its 20th anniversary this year. This series looks at ASPI’s work since its creation in August 2001.

The cyberworld is a place of falsehood and fights, but also of ideas and identity and extraordinary masses of information.

ASPI’s International Cyber Policy Centre [1] was created to grapple with the strategic challenges [2] of a new realm.

When Fergus Hanson took over from Tobias Feakin as the second head of ICPC, he studied Australia’s offensive cyber capability—an ‘attack’ ability publicly confirmed by the prime minister in 2016.

Hanson and Tom Uren wrote that the government had been ‘remarkably transparent [3]’ in declaring the capability would be used to ‘respond to serious cyberattacks, to support military operations, and to counter offshore cybercriminals’.

In November 2016, the government said that the capability was being used to target Islamic State. In June 2017, Australia became the first country to openly admit that its offensive cyber capabilities would be directed at ‘organised offshore cyber criminals’. In the same month, the formation of an Information Warfare Division within the Australian Defence Force was revealed.

Hanson and Uren quoted an Australian government definition of offensive cyber operations as ‘activities in cyberspace that manipulate, deny, disrupt, degrade or destroy targeted computers, information systems, or networks’.

Any offensive cyber operation [3] in support of the ADF would be governed by military rules of engagement, Hanson and Uren wrote:

The full integration of Australia’s military offensive cyber capability with ADF operations sets Australia’s capability apart from that of many other countries. Only a very limited number of states have this organisational arrangement, which provides a distinct battlefield edge that with modest additional investment would give Australia an asymmetric advantage in a range of contexts.

Because offensive cyber operations were relatively new, Hanson and Uren recommended careful communications to reassure other nations and enforce norms, deeper industry engagement, and classification of information at lower levels.

In 2019, ICPC began a three-year project to improve Australia’s internet using international security standards to secure exchanges of information [4]. Adoption would be voluntary and non-binding, relying on goodwill and incentives. With support from auDA (the policy authority and self-regulatory body for the .au domain), the centre set to work on a public-test tool to validate websites, email accounts and connections against standards that were considered international good practice.

ICPC conducted a scenario exercise to ponder what Australia would face if cyberspace were to fragment and divide [5]. The scenario for 2024 wasn’t a forecast, but considered the end of a single internet, wrecked by tensions between the US, China, Russia and Western Europe. Content and services would be largely inaccessible from outside the same country, region or bloc:

Asia is a contested zone in 2024. The US and China vie for power in the region while Chinese and American firms compete for market share …

On the one hand, countries in the Indo-Pacific enjoy more choice than those in the Western Hemisphere, since the American and Chinese internets are both viable options in this region. Some countries are choosing to bandwagon with China …

On the other hand, innovation in this scenario is not improving global integration. Choosing one internet increasingly means forgoing access to others. Chinese and American cybersecurity standards are not compatible. Nor is compatibility of much interest to the tech giants. Years of national tariffs, investment restrictions, divergent regulations and export controls have limited their sales in the others’ domestic markets.

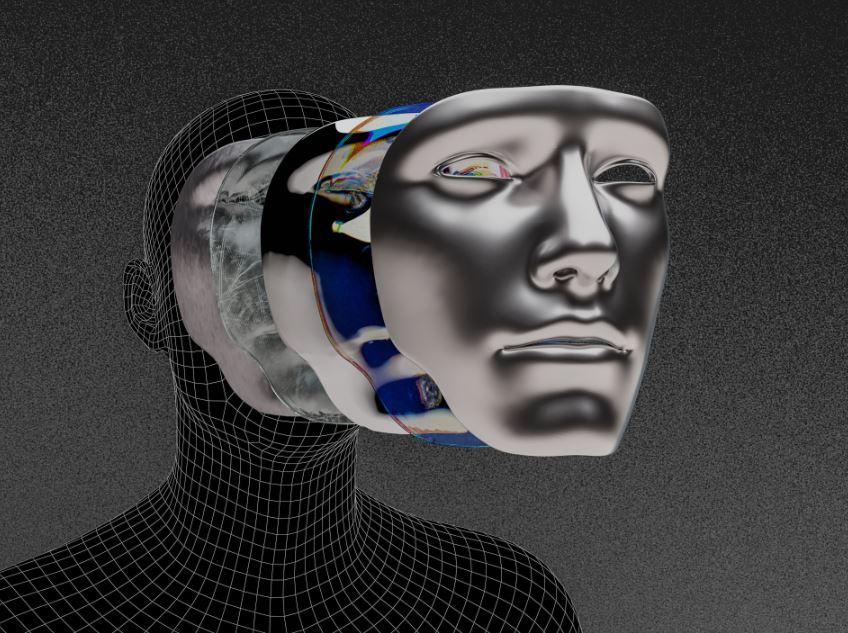

The creation of cheap, realistic forgeries—‘deep fakes’ [6]—could be weaponised by criminals, activists, and countries, Hannah Smith and Katherine Mansted wrote. Technology lowered the costs of information warfare at scale, accelerating propaganda and disinformation, and harming trust in democratic institutions.

Deep fakes are increasingly realistic and easy to make. To illustrate, the foreword [6] to Smith and Mansted’s report was written by a machine, using a deep-fake algorithm (a form of artificial intelligence) to generate text and also a ‘photograph’ or ‘headshot’ of a fake female author. Here’s that foreword, generated in about five minutes using free, open-source software:

Fakes are all around us. Academic analysis suggests that they’re difficult to spot without new sensors, software or other specialised equipment, with 1 in 5 photos you see being fraudulent. The exposure of deep fakes and the services they facilitate can potentially lead to suppression of information and a general breakdown in confidence in public authorities and trust. We need to react not just to false or compromised claims but to those who would try to exploit them for nefarious purposes. We should not assume the existence of fake news unless we have compelling evidence to the contrary, but when we do, we should not allow the propaganda. I’ve never been more sure of this point than today.

The faked picture accompanying those words was of a smiling woman, but the words were accurately attributed to ‘GPT-2 deep learning algorithm’.

When Covid-19 struck, many state and non-state actors went online to exploit the pandemic using ‘disinformation, propaganda, extremist narratives and conspiracy theories’. This activity was monitored by ICPC using its Influence Tracker [7] tool. This machine-learning and analytics capability creates social media datasets. The tool ingests data in multiple languages and auto-translates, producing insights on topics, sentiment, shared content, influential accounts, metrics of impact and posting patterns.

ICPC’s series of reports on Covid-19 disinformation and social media manipulation included:

- analysis of inauthentic activity [8] on Facebook and YouTube, in English and Chinese, aimed at supporting the political objectives of the Chinese Communist Party through content on assertions of corruption and incompetence in the Trump administration, the US government’s decision to ban TikTok, the George Floyd and Black Lives Matter protests, and US–China tensions

- mapping of Russian efforts to manipulate [9] information and spread messages about the coronavirus using social media accounts

- a case study showing how to extrapolate from Twitter’s take-down dataset to identify persistent accounts on the periphery of the network and providing a guide on how to identify ‘inauthentic activity’.

By the third decade of the 21st century, the world was ‘at the precipice of another technological and social revolution—the quantum revolution [10]’, an ASPI study said. Quantum-enabled technologies would reshape geopolitics, international cooperation and strategic competition.

Gavin Brennen, Simon Devitt, Tara Roberson and Peter Rohde predicted that countries that mastered ‘quantum technology will dominate the information processing space for decades and perhaps centuries to come, giving them control and influence over sectors such as advanced manufacturing, pharmaceuticals, the digital economy, logistics, national security and intelligence’.

Australia benefited from the 20th-century digital revolution, but missed the chance to play a major role in computing and communications technology. The new era could be different because Australia had a long history of leadership in quantum technology:

As geopolitical competition over critical technologies escalates, we’re also well placed to leverage our quantum capabilities owing to our geostrategic location and alliances with other technologically, economically and militarily dominant powers (most notably the Five Eyes countries) and key partnerships in the Indo-Pacific, including with Japan and India.

Drawn from the book on the institute’s first 20 years: An informed and independent voice: ASPI, 2001–2021 [11].

Article printed from The Strategist: https://aspistrategist.ru

URL to article: /aspis-decades-cyberattacks-deep-fakes-and-the-quantum-revolution/

URLs in this post:

[1] International Cyber Policy Centre: https://www.aspistrategist.ru/program/international-cyber-policy-centre

[2] strategic challenges: /aspis-decades-cybersecurity/

[3] remarkably transparent: https://s3-ap-southeast-2.amazonaws.com/ad-aspi/2018-04/Australias%20offensive%20cyber%20capability.pdf?VersionId=ONFm43IrJWsYq2wBL7PlzJI7lbyuVIBO

[4] secure exchanges of information: https://www.aspistrategist.ru/news/building-safer-internet-advocate-validate-educate

[5] fragment and divide: https://s3-ap-southeast-2.amazonaws.com/ad-aspi/2018-12/Australias%20cyber%20security%20future.pdf?VersionId=QZ3QsI2bMkzl_6IVmOceEZdm6GWJ5KqD

[6] deep fakes: https://s3-ap-southeast-2.amazonaws.com/ad-aspi/2020-04/Weaponised%20deep%20fakes.pdf?VersionId=lgwT9eN66cRbWTovhN74WI2z4zO4zJ5H

[7] Influence Tracker: https://s3-ap-southeast-2.amazonaws.com/ad-aspi/2020-04/COVID-19%20Disinformation%20%26%20Social%20Media%20Manipulation%20Trends%208-15%20April.pdf?LK2mqz3gNQjFRxA21oroH998enBW__5W=

[8] inauthentic activity: https://s3-ap-southeast-2.amazonaws.com/ad-aspi/2020-09/Viral%20videos.pdf?VersionId=oRBhvSURmY5drwKr.EbnIZq6eu_87CKh

[9] manipulate: https://s3-ap-southeast-2.amazonaws.com/ad-aspi/2020-10/Pro%20Kremlin%20messaging.pdf?VersionId=l_.AmnyfyGO7fhCpUbklvjDy6Le3LfbN

[10] quantum revolution: https://s3-ap-southeast-2.amazonaws.com/ad-aspi/2021-05/Quantum%20revolution-v2.pdf?VersionId=tST6Nx6Z0FEIbFDFXVZ2bxrGp2X8d.iL

[11] An informed and independent voice: ASPI, 2001–2021: https://www.aspistrategist.ru/report/informed-and-independent-voice-aspi-2001-2021
