In my previous post on project timelines, I made the point that defence projects these days are taking longer to deliver than in the past. The reasons are many and varied (and we shouldn’t rule out poor governance), but a significant driver is the sheer complexity of modern systems. Because we demand ever higher levels of performance and (especially) integration, those systems are taking longer and longer to deliver.

But there’s an associated question that’s the subject of this post: why aren’t we accurately predicting how long it will take? I’m just finalising a report card for the 2000 Defence White Paper and a striking observation is just how poor the estimates of project delivery timeframes were. I’ll spell out the grisly details in a forthcoming paper, but the average planned duration of major projects was around 7 years. In 2013, we’re still waiting on a number of them to be delivered, and the best guess at the moment is that the actual average duration will be closer to 13 years, a schedule overrun of more than 90%.
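For readers who want to check the arithmetic, here is a minimal sketch of how a schedule overrun is usually expressed as a share of the planned duration. The function name `schedule_overrun` is purely illustrative, and the inputs are the rounded averages quoted above rather than the underlying project data. With those rounded inputs the result comes out at roughly 86%, so the "more than 90%" figure presumably reflects the unrounded averages.

```python
def schedule_overrun(planned_years: float, actual_years: float) -> float:
    """Return the overrun as a fraction of the planned duration."""
    if planned_years <= 0:
        raise ValueError("planned duration must be positive")
    return (actual_years - planned_years) / planned_years


# Rounded averages from the text (assumed inputs, not the full data set).
overrun = schedule_overrun(planned_years=7, actual_years=13)
print(f"Schedule overrun: {overrun:.0%}")
```

The same calculation can be run project by project once the individual planned and actual durations are available.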

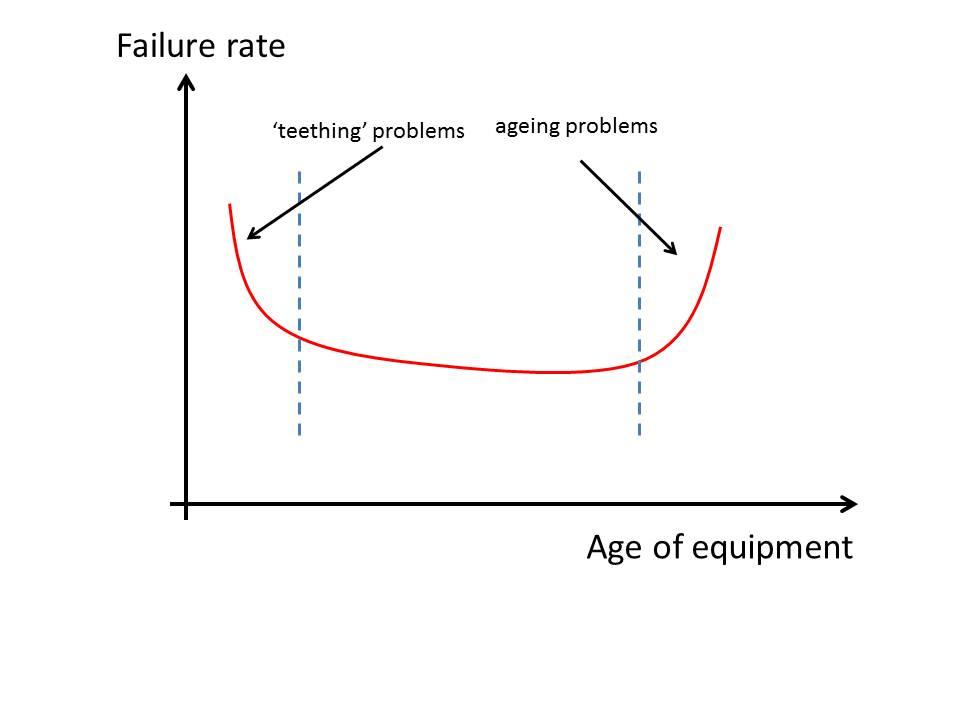

It’s usually cost overruns that make the headlines, but I think there’s a case to be made that schedule delays are actually worse if our ultimate concern is the ADF having equipment that’s fit for purpose. And, of course, it’s true that time is money. When older equipment has to stay in service longer, the cost of operating it usually increases. Conventional wisdom says that there’s a ‘bathtub curve’ of operating costs, in which equipment is most likely to fail frequently either very early in its life or very late, with a ‘sweet spot’ in between, and that curve is a reality for the ADF. (See schematic below.) In practice, it’s the rate of failure of major system components that matters most.

A good example of this phenomenon is the RAAF’s ‘classic’ F/A-18A/B Hornet fleet. Built back in the 1980s, these aircraft have long passed their best years and, although they are still under their design lifetime in flying hours, have already consumed a $3.7 billion upgrade and structural refurbishment program to keep them current. The plan in the 2000 White Paper was to replace them when they ‘reach the end of their service life between 2012 and 2015’. Now the plan is to keep them in service until 2020. This isn’t cheap or risk-free. As the National Audit Office observes (PDF):

[There are] risks inherent in the management of aged combat aircraft. These risks are wide‐ranging and require ongoing, prudent management if the … Hornet fleet [is to remain] capable of satisfying approved operational commitments, while it undergoes aircraft and weapons systems upgrades, airframe structural refurbishments, periodic Deeper Maintenance and Operational (flight‐line) Maintenance. …significant aged‐aircraft issues are resulting in maintenance durations and costs becoming less predictable. Annual spending to sustain the Hornet fleet has averaged $118 million since 2000–01, but is trending towards $170 million per annum over the next several years. The cost of airframe corrosion‐related repairs has also increased significantly, from $721 000 in 2007 to $1.367 million as estimated in 2011.

But it could be worse. At least the Hornets are still flying and their system upgrades have kept them competitive, albeit increasingly short of state of the art. In other areas of capability the ADF has found itself without anything at all—such as dipping sonar on naval helicopters (none since 1996 and not likely to have any before 2016), battlefield airlift (Caribou retired 2009 and replacement not due in service until 2016) and air-to-air refuelling (no capability from 2008 to 2013). In those cases the cost isn’t measured in dollars, but in terms of the ADF’s capability. In turn that impacts negatively on the range of military options available to the government of the day—which translates into additional strategic risk.

In short, no wonder ex-DMO chief Stephen Gumley spent more time worrying about schedule than costs. (Although it defies belief that schedule overruns don’t translate into cost overruns—at the very least there’ll be the additional expense of keeping the staff around for longer.) The question then becomes how we better manage project schedules—if we knew accurately in advance how long something was going to take, then we could factor the costs of that timing into the cost-benefit calculus of the options on the table. Unfortunately, there doesn’t seem to be much prospect of that happening. Despite generations of engineers and analysts working hard to make estimations more rigorous, a conspiracy of optimism still prevails within project circles. Part 3 of this series will take a look at the historical data and some of the current thinking on the subject. In the meantime, Peter Layton will be along this afternoon with some thoughts on managing long project timeframes.

Andrew Davies is the senior analyst for defence capability at ASPI and executive editor of The Strategist.